The Role of Language Models in Simulating the Unconscious Mind

If you take a pre-trained language model and simply let it start generating text, it will begin "dreaming". That is, it will generate things like:

HTML for a correct-looking (but non-existent) Amazon product page.

Correct-looking (but non-existent) Shakespearean dialogue.

Correct-looking (but purely imagined) chat history.

In other words, it dreams. One key intuition I want to convey in this article is that LLMs don't just hallucinate some of the time. Rather, they hallucinate all of the time. That's what they do. That's all they do. That's the nature of the invention. It’s a dreaming machine.

Whether or not that dream is accurate to reality is something a language model cannot ascertain on its own, since its function is purely to predict the next word based on the previous words. This is not a failing of language models, but is in fact the very nature of the invention: a dreaming machine.

If we want to ground the output from the LLM in reality, then we have to use "layer 2" components: tool-calling. The LLM must be informed of the tools at its disposal, and it must understand the distinction between "making up an answer off the top of your head" versus "actually calling the tool to see the actual result".

It's perfectly fine to say, "Off the top of my head, I'd say that's probably a good way to go." Or "Off the top of my head, I think those fish will probably prefer the smaller worms over the bigger ones" (for example). These hypothetical answers can be useful for retrieval. (See the HyDE paper.)
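As a rough sketch of how an off-the-top-of-the-head answer can help retrieval: the guess is embedded, and real documents are ranked by similarity to the guess rather than to the question. Everything here is an assumption for illustration: `hypothetical_answer` is a hard-coded stand-in for an LLM call, `embed` is a toy bag-of-words counter rather than a real embedding model, and the two-document corpus is made up.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hypothetical_answer(question):
    """Stand-in for an LLM answering off the top of its head."""
    return "those fish will probably prefer the smaller worms over the bigger ones"


def retrieve_with_guess(question, corpus):
    """Rank real documents by similarity to the dreamed-up answer."""
    guess_vector = embed(hypothetical_answer(question))
    return max(corpus, key=lambda doc: cosine(guess_vector, embed(doc)))


# Made-up corpus, purely for illustration.
corpus = [
    "field notes: trout took smaller worms far more often than bigger worms",
    "a guide to choosing fishing rods and reels for beginners",
]

print(retrieve_with_guess("What bait do the fish prefer?", corpus))
```

The guess itself may be wrong, but it is phrased like the kind of document that holds the real answer, which is what makes it a useful search key.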

But it can also be very useful to call a tool, get back an actual result, and then use the tool output as an input to the LLM for generating the final answer. In this way, language models can be grounded in reality.

Experiences are like notifications in the stream of consciousness

Sensations simply "occur" whether we like it or not - in real time. We perceive them. They happen and they keep happening. There is a constant stream of these coming in from our senses, due to our embodiment. They get recorded into episodic memory. The stream is available in working memory as a constant "stream of consciousness" of our conscious experience. Some experiences are more painful or pleasurable than others, and thus cause more powerful reactions and form more vivid memories than others.

Tool Use and Observations

In contrast to sensations, which simply occur, we choose to make observations. That is, we choose to call a tool at our disposal, in order to give the output from that tool to the LLM to generate the final answer.

This grounds the model in reality, since the tool output is placed into the input context for the language model, and the output generated by an LLM is powerfully influenced by what is placed in the input context.

If the input context contains "Why did the chicken", we naturally expect the next predicted word to be "cross". And if that word "cross" is added to the input context (which now contains "Why did the chicken cross"), we expect the next predicted word to be "the", the word after that to be "road", and so on.
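To make that loop concrete, here is a minimal sketch. The lookup table `NEXT_WORD` and the helpers `predict_next` and `generate` are hypothetical stand-ins for illustration; a real language model produces a probability distribution over a large vocabulary rather than consulting a three-entry table, but the predict, append, repeat loop has the same shape.

```python
# Toy "model": maps the last two words of the context to the next word.
# This table is a hand-written assumption, not a trained model.
NEXT_WORD = {
    ("the", "chicken"): "cross",
    ("chicken", "cross"): "the",
    ("cross", "the"): "road",
}


def predict_next(context):
    """Return the predicted next word, or None if the toy table has no entry."""
    key = tuple(word.lower() for word in context[-2:])
    return NEXT_WORD.get(key)


def generate(prompt, max_new_words=10):
    """Repeatedly predict a word and append it to the input context."""
    context = prompt.split()
    for _ in range(max_new_words):
        next_word = predict_next(context)
        if next_word is None:
            break
        context.append(next_word)  # the prediction becomes part of the input
    return " ".join(context)


print(generate("Why did the chicken"))  # Why did the chicken cross the road
```

Each predicted word is fed straight back in as input for the next prediction, which is why whatever sits in the context has so much influence over what comes out.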

Continuing in this vein: if you run a large language model in an isolated environment and ask it, “What is the current temperature in San Diego?”, the model will normally just hallucinate an answer: “The temperature in San Diego is currently a balmy 65 degrees!” (It is making up the answer, because that’s all a language model can ever do: predict the next word, and the next word, and so on. It’s hallucinating all the time!)

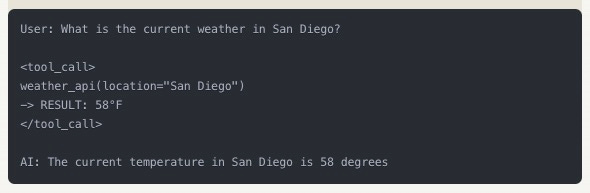

But let’s say that the model is instructed that it has a tool for checking the weather by city. So anytime it is asked about the weather, it knows that, instead of answering off the top of its head, it should first use its tool to find out the answer. In that circumstance, the model calls its “weather” tool, passing it “San Diego”, and the tool returns “58 degrees” from a web API call.

That answer from the weather tool is placed in the model’s input context, alongside the original question, before it generates the final answer.

I’m sure you can imagine that if you pass that input to a language model, the output it generates will be something like this: “The current weather in San Diego is 58 degrees.”

Notice that the language model is hallucinating either way. But the answer it provides now is an accurate 58 degrees (returned from the tool call) instead of a purely invented one (“65 degrees”) off the top of its head.
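The two San Diego cases can be sketched side by side. This is only an illustration under stated assumptions: `fake_weather_tool` stands in for the web API call, `fake_model` stands in for the LLM (it simply echoes a tool reading if one is in its context and otherwise makes one up), and the `Tool(weather):` line format is invented for this example rather than taken from any real tool-calling API.

```python
def fake_weather_tool(city):
    """Stand-in for a web API call; returns the article's example reading."""
    readings = {"San Diego": "58 degrees"}
    return readings.get(city, "unknown")


def build_context(question, tool_result=None):
    """Assemble the model's input context, optionally including tool output."""
    parts = [f"User: {question}"]
    if tool_result is not None:
        parts.append(f"Tool(weather): {tool_result}")  # assumed format
    parts.append("Assistant:")
    return "\n".join(parts)


def fake_model(context):
    """Stand-in for an LLM, hard-coded to the San Diego example."""
    prefix = "Tool(weather): "
    for line in context.splitlines():
        if line.startswith(prefix):
            # A tool reading is in the context, so the "dream" follows it.
            return f"The current weather in San Diego is {line[len(prefix):]}"
    # Nothing to ground on: invent a plausible-sounding answer.
    return "The temperature in San Diego is currently a balmy 65 degrees!"


question = "What is the current temperature in San Diego?"

# Isolated model: answers off the top of its head.
print(fake_model(build_context(question)))

# Grounded model: the tool result is placed in the context first.
tool_result = fake_weather_tool("San Diego")
print(fake_model(build_context(question, tool_result)))
```

The model function is the same in both calls; the only thing that changes is what is in its input context.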

LLMs are the answer to that philosophical question, Do Androids Dream of Electric Sheep? The answer is yes, they do.

The Power of Recognized Dreams

Understanding language models as dreaming machines isn't just a theoretical insight - it has practical implications for how we build and use AI systems. When we recognize that a language model is always dreaming, we can be more intentional about when to let it dream freely and when to ground it through tools and experiences.

Sometimes we want the dreams - when brainstorming ideas, exploring possibilities, or engaging in creative tasks. The model's ability to generate plausible content based on patterns can be incredibly valuable in these contexts. It's perfectly fine to say "Off the top of my head, I think..." when we're exploring possibilities rather than stating facts.

Other times we need reality - when making important decisions, providing factual information, or taking actions in the physical world. In these cases, we need to ground the model's dreams through tools and experiences. The model's role then becomes interpreting and responding to real information rather than generating plausible but potentially incorrect content.

Implications for AGI

This understanding of language models as dreaming machines has profound implications for AGI development. It suggests that we shouldn't try to force language models to be something they're not - they are generators of possibilities, not arbiters of truth. Instead, we should embrace their dreaming nature while building appropriate frameworks for grounding their dreams in reality.

This mirrors human intelligence in many ways. We don't rely solely on our unconscious mind's generations - we check facts, we use tools, we learn from experience. But neither do we rely solely on direct observation - we imagine, we extrapolate, we dream. True intelligence requires both capabilities, working in harmony.